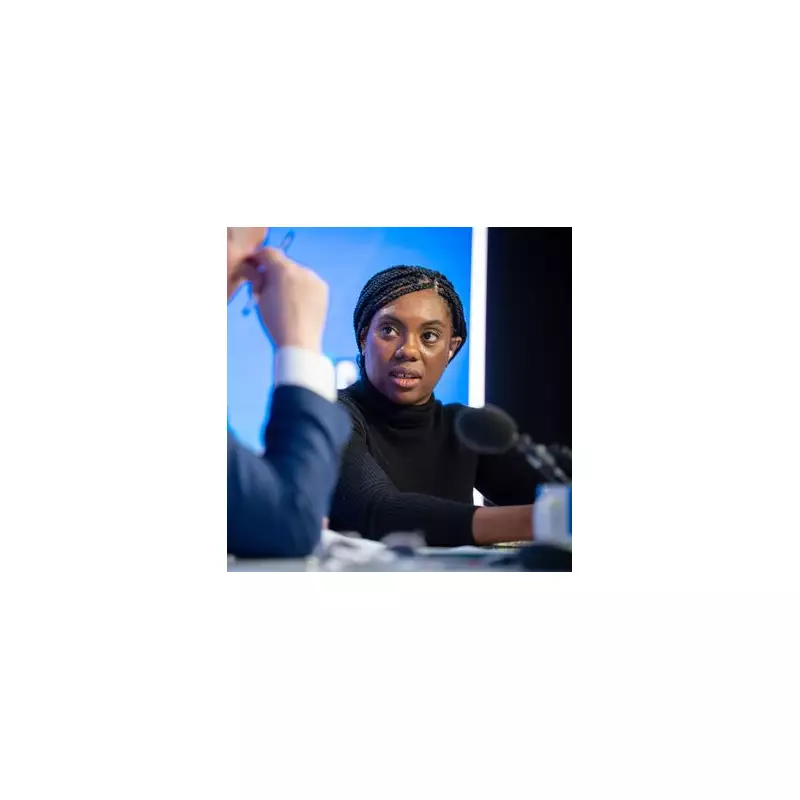

Business Secretary Kemi Badenoch has told Parliament that OpenAI's ChatGPT generated what she called "complete nonsense" when she questioned it about UK political matters, an account that points to significant flaws in artificial intelligence systems.

The Parliamentary Revelation

During a recent session, Badenoch described her own experiment with the popular AI tool, saying it returned fabricated information about British politics that bore no resemblance to reality. The cabinet minister's disclosure adds to growing concerns about whether AI systems can be relied on for accurate political information.

Questionable Responses from AI

According to Badenoch's account, ChatGPT's responses were not merely inaccurate but disconnected from actual UK political processes and structures. The minister said the output showed a clear lack of understanding of how British politics functions.

Broader Implications for AI Regulation

This revelation comes at a critical time, as the UK government prepares to host the first major global AI Safety Summit at Bletchley Park. The incident raises important questions about:

- The potential for political bias in AI training data

- Risks of misinformation from automated systems

- The need for robust verification processes

- Regulatory frameworks for AI deployment

Government's Stance on AI Development

While highlighting these concerns, Badenoch maintained the government's position that Britain should embrace AI technology rather than implement heavy-handed regulation that could stifle innovation. However, her experience underscores the delicate balance between fostering technological advancement and ensuring public trust in these systems.

Industry Context

The disclosure follows recent controversies in the AI sector, including legal challenges against OpenAI over its data sourcing practices. These developments show how difficult it is for policymakers to keep pace with the rapid evolution of artificial intelligence technologies.

As the UK positions itself at the forefront of AI safety discussions, experiences like Badenoch's are a reminder of the practical challenges that must be addressed alongside the theoretical risks of artificial intelligence.