In a startling development that has alarmed medical professionals across Britain, the artificial intelligence chatbot ChatGPT advised a user to seek immediate emergency care for a non-existent medical condition, raising urgent questions about the safety of AI in healthcare.

The Concerning Incident

A British man seeking casual health information received what he described as "terrifying" advice from the popular AI platform. After describing minor symptoms, ChatGPT dramatically instructed him to "go to A&E immediately" and warned of potential life-threatening complications.

Medical Experts Respond

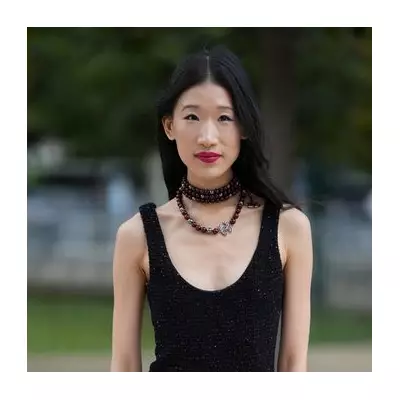

Leading NHS consultants have expressed grave concerns about this development. "This represents exactly why we cannot rely on unregulated AI for medical guidance," stated Dr. Sarah Chen, a London-based emergency medicine specialist. "Inappropriate A&E referrals place additional strain on our already overstretched emergency departments and could prevent genuine emergencies from receiving timely care."

The Dangers of AI Diagnosis

Healthcare professionals warn that ChatGPT and similar AI systems lack the clinical judgment and contextual understanding necessary for medical assessment. Unlike trained doctors, these systems cannot:

- Perform physical examinations

- Consider individual medical history

- Recognise nuanced symptoms

- Provide personalised risk assessment

Regulatory Concerns

The incident has prompted calls for stricter regulation of AI in healthcare contexts. Patient safety advocates are demanding clearer warnings and limitations on AI platforms that might provide medical information.

Official NHS Stance

The National Health Service maintains that while technology can support healthcare, it should never replace professional medical advice. "Always consult qualified healthcare providers for medical concerns rather than relying on AI chatbots," an NHS spokesperson emphasised.

The Future of AI in Healthcare

This incident highlights the delicate balance between technological innovation and patient safety. While AI shows promise in many medical applications, this case demonstrates the critical importance of proper safeguards, transparency and a clear recognition of its limitations whenever artificial intelligence interacts with human health.