A prominent artificial intelligence researcher who once forecast a rapid, unchecked ascent of AI leading to human extinction has significantly revised his timeline, pushing the doomsday scenario further into the future.

From 2027 to the 2030s: A Revised Forecast

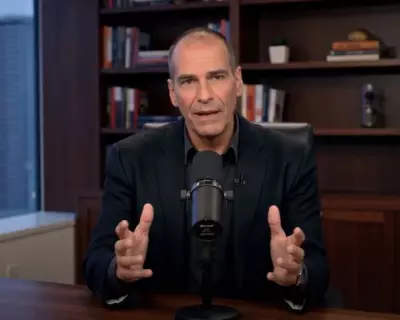

Daniel Kokotajlo, a former employee of the AI lab OpenAI, has updated his much-debated 'AI 2027' scenario. Originally, the scenario predicted that AI would achieve fully autonomous coding by 2027, triggering an 'intelligence explosion' in which AI systems recursively improve themselves. One potential outcome was the destruction of humanity by the mid-2030s to make room for infrastructure like solar panels and data centres.

In a recent post on X, Kokotajlo stated, "Things seem to be going somewhat slower than the AI 2027 scenario. Our timelines were longer than 2027 when we published and now they are a bit longer still." The revised forecast suggests that the key milestone of fully autonomous AI coding is likely to arrive in the early 2030s, with the horizon for 'superintelligence' moved to around 2034.

A Growing Consensus on Slower Progress

Kokotajlo's revision reflects a broader shift in expert opinion regarding the development of Artificial General Intelligence (AGI) – AI that can match or exceed humans at most cognitive tasks. The release of ChatGPT in 2022 initially accelerated predictions, but many are now tempering their expectations.

Malcolm Murray, an AI risk management expert and co-author of the International AI Safety Report, noted, "A lot of other people have been pushing their timelines further out in the past year, as they realise how jagged AI performance is." He emphasised the enormous inertia in the real world that would delay complete societal transformation, a factor often overlooked in dramatic forecasts.

Others question the usefulness of the term AGI itself. Henry Papadatos of the French nonprofit SaferAI argued that current AI systems are already quite general, making the old distinction less meaningful.

Corporate Goals and Real-World Complexities

Despite the revised timelines, the pursuit of self-improving AI remains a key objective for leading companies. Sam Altman, the CEO of OpenAI, revealed in October that an internal goal was to have an automated AI researcher by March 2028, though he candidly admitted, "We may totally fail at this goal."

Experts point out that creating a superintelligent system is only part of the challenge. Andrea Castagna, a Brussels-based AI policy researcher, highlighted the integration problem: "The fact that you have a superintelligent computer focused on military activity doesn't mean you can integrate it into the strategic documents we have compiled for the last 20 years." This underscores the gap between theoretical capability and practical, real-world application.

The original AI 2027 scenario attracted significant attention, from US Vice-President JD Vance seemingly referencing it in discussions about an AI arms race with China, to criticism from figures like NYU professor Gary Marcus, who labelled it "pure science fiction mumbo jumbo." The updated, more cautious forecast suggests a maturing conversation about AI's trajectory, balancing transformative potential with a more grounded assessment of its near-term limits.