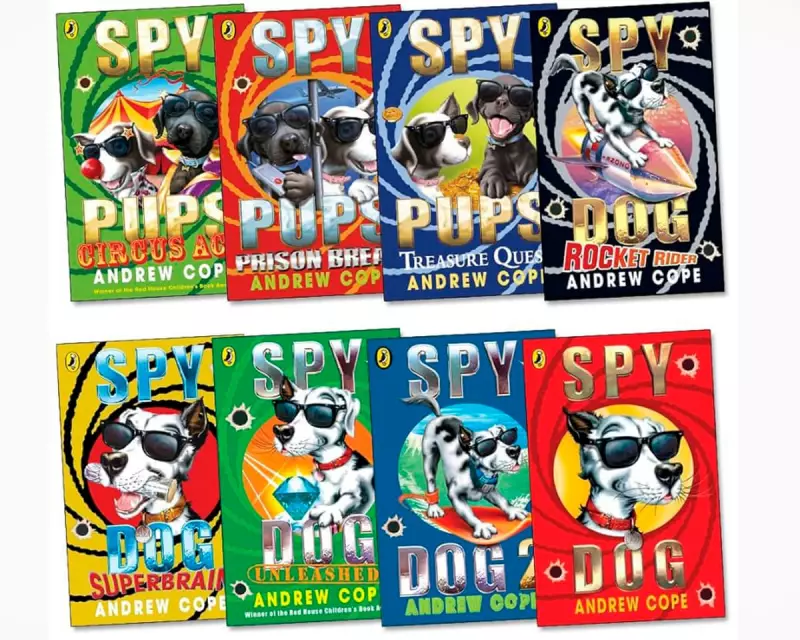

A leading educational publisher has become embroiled in a controversy after automated content filters mistakenly classified a children's book as "pornography," resulting in its removal from digital library platforms used by schools across the United Kingdom.

The incident has exposed the growing tension between protecting young students online and ensuring access to appropriate educational materials, and it raises serious questions about the reliability of automated content moderation in schools.

The Filter Failure

The controversy began when web filtering systems designed to block inappropriate content flagged a children's book available through widely used library platforms. The systems classified the material as pornographic, and access was immediately restricted for students and educators.

Education professionals expressed alarm at the classification, noting that the book contained no explicit content and was age-appropriate for its intended school audience. The erroneous labelling has raised concerns that overzealous content moderation could restrict access to legitimate educational resources.

Industry Response and Concerns

Library services and educational technology providers have acknowledged the incident, highlighting the challenges of balancing child protection with educational freedom. A spokesperson for one affected platform stated they were "reviewing their filtering protocols" to prevent similar occurrences in the future.

The publishing industry has voiced significant concerns about the implications of such automated decisions. "When educational materials are wrongly categorised as pornography, it undermines the very purpose of school library systems," commented a representative from a major publishing house.

Broader Implications for Digital Education

The incident comes amid the growing digitisation of UK classrooms, where schools rely more and more on digital platforms for reading materials and other educational resources. It highlights the risks of relying on automated systems without adequate human oversight.

Education experts warn that such filtering errors could have a chilling effect on the range of reading materials available in schools, limiting students' exposure to important literary works and educational content.

Moving Forward

The publishing and education sectors are now calling for more sophisticated content moderation approaches that combine technological solutions with professional human judgment. There are growing demands for transparent appeals processes when materials are incorrectly flagged.

As digital libraries become increasingly integral to modern education, this incident serves as a crucial reminder of the need to balance safeguarding concerns with the fundamental right to access appropriate educational materials.