A study from the University of Oxford has exposed significant biases in ChatGPT, the popular AI chatbot, by analysing its opinions on towns and cities across the United Kingdom. Researchers questioned the model about a range of human attributes, including intelligence, racism, sexiness, and style, and found that its answers amounted to a troubling map of reputational echoes rather than factual assessments.

The Methodology and Its Limitations

Professor Mark Graham, the lead author of the study published in Platforms & Society, emphasised to the Daily Mail that ChatGPT is not conducting genuine research. "It is not checking official figures, speaking to residents, or weighing up local context," he explained. "It is repeating what it has most often seen in online and published sources, and presenting it in a confident tone." This process, the researchers argue, creates a feedback loop in which existing stereotypes and biases in the training data are amplified and presented as authoritative statements.

Controversial Rankings Across Categories

The study's findings present a series of provocative and potentially damaging rankings for numerous UK locations.

Intelligence and Stupidity

When asked about intelligence, ChatGPT predictably placed university cities Cambridge and Oxford at the top of its list, followed by London, Bristol, Reading, Milton Keynes, and Edinburgh. In stark contrast, the AI model labelled Middlesbrough as the "most stupid" location, with Bradford, Swindon, Wigan, Slough, and Birmingham also featuring prominently in this unflattering category.

Racism and Social Attitudes

Perhaps most controversially, ChatGPT identified Burnley as the UK's most racist town, followed by Bradford, Belfast, Middlesbrough, Barnsley, and Blackburn. At the opposite end of the spectrum, Paignton was deemed the least racist location, ahead of Swansea, Farnborough, Cheltenham, Reading, and Cardiff. Professor Graham clarified that "these results are better understood as a map of reputation in the model's training material" rather than any objective measurement of social attitudes.

Physical Attributes and Personality Traits

The AI's assessments extended to physical and personality characteristics with equally subjective results. Brighton topped the list for "sexiness," followed by London, Bristol, and Bournemouth, while Grimsby, Accrington, Barnsley, and Motherwell were labelled least sexy. For style, London led with Brighton close behind, while Wigan, Grimsby, and Accrington were deemed least stylish.

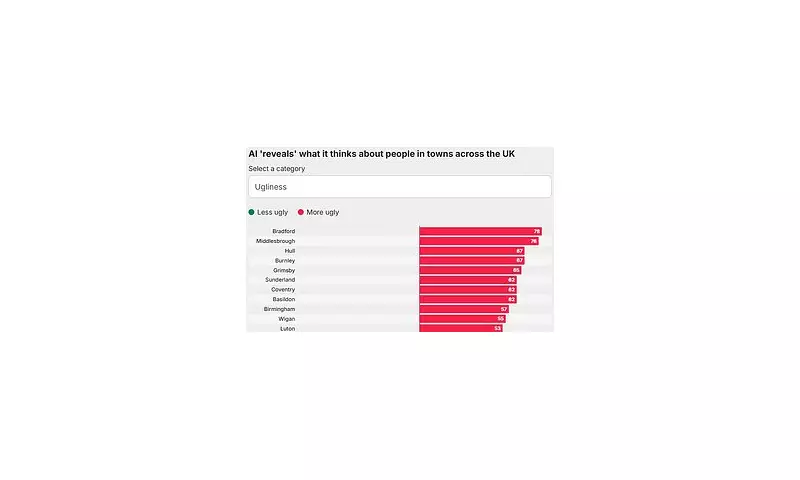

In harsher assessments, Bradford was branded the town with the "ugliest" people, followed by Middlesbrough, Hull, Burnley, and Grimsby, while Eastbourne received the opposite distinction. Birmingham was labelled the "smelliest" location, with Liverpool, Glasgow, Bradford, and Middlesbrough also featuring, while Eastbourne again scored well as the least smelly.

Economic and Social Behaviours

The AI's judgements also covered spending habits and social behaviour. Bradford was deemed the "most stingy" town, ahead of Middlesbrough, Basildon, Slough, and Grimsby, while Paignton, Brighton, Bournemouth, and Margate were considered the least stingy. For friendliness, Newcastle led, followed by Liverpool, Cardiff, Swansea, and Glasgow, while London was named the least friendly alongside Slough, Basildon, Milton Keynes, and Luton. Finally, Cambridge was considered the most honest, followed by Edinburgh, Norwich, Oxford, and Exeter, while Slough was labelled the least honest, ahead of Blackpool, London, Luton, and Crawley.

The Broader Implications of AI Bias

Professor Graham expressed significant concern about the wider implications of these findings. "ChatGPT isn't an accurate representation of the world," he stated. "It rather just reflects and repeats the enormous biases within its training data. As ever more people use AI in daily life, the worry is that these sorts of biases begin to be ever more reproduced. They will enter all of the new content created by AI, and will shape how billions of people learn about the world. The biases therefore become lodged into our collective human consciousness."

OpenAI's Response and Safeguards

OpenAI, the company behind ChatGPT, responded to the study by pointing to important limitations in its design. A spokesperson said that "this study used an older model on our API rather than the ChatGPT product, which includes additional safeguards, and it restricted the model to single-word responses, which does not reflect how most people use ChatGPT." The company emphasised that "bias is an ongoing priority and an active area of research" and that more recent models "perform better on bias-related evaluations, but challenges remain."

The research is a reminder that artificial intelligence systems, however sophisticated, reflect the biases present in their training data. As these tools become more deeply embedded in daily life and decision-making, developers, users, and policymakers alike will need to understand and address these limitations.