Entertainment

Global Lens: The Week's Top 20 Photographs Capture World's Stories

Explore a compelling visual journey through the week's global events, from political protests to cultural celebrations, captured in 20 striking images by leading photojournalists.

Sports

Kirk Herbstreit Criticised ESPN Over 'Oversaturated' College Football Bowl Season

ESPN analyst Kirk Herbstreit reveals he told bosses there are too many 'meaningless' college football bowl games, a view that did not go down well at the network.

Politics

Palestine Hunger Striker Loses Speech After 61 Days Without Food in UK Prison

Heba Muraisi, on hunger strike in a Yorkshire prison in solidarity with Palestine Action, reports losing her ability to form sentences. Lawyers warn of 'potential death'.

Crime

Police Search for Missing 17-Year-Old Tourist Last Seen in London's Chinatown

The Met Police are urgently seeking information on Sarra, 17, last seen in the Chinatown and Covent Garden area on 23 December. She was wearing a white hoodie and trousers. Anyone with information should call 999.

Health

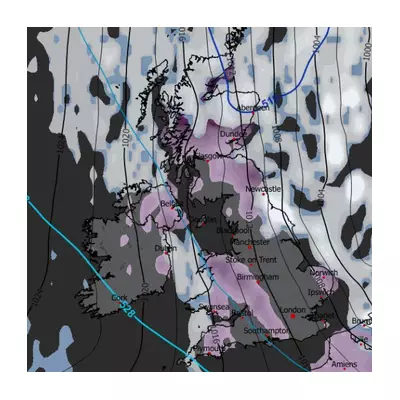

Weather

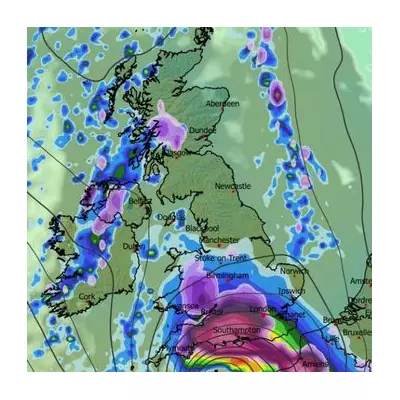

Arctic Freeze to Grip UK for Up to a Week

The Met Office warns that a brutal Arctic freeze will last up to a week, with temperatures struggling to rise above 0°C and significant snowfall expected. Health alerts have been upgraded and vulnerable people warned.

108 UK areas told to pack 9 items for snow and ice

The Met Office has issued urgent weather warnings for snow and ice across the UK, urging motorists in 108 areas to keep nine essential items in their cars until Monday.

Arctic Freeze Grips UK with Heavy Snow and Amber Warnings

The Met Office warns of a brutal Arctic freeze lasting a week, with temperatures struggling to rise above 0°C and heavy snow causing disruption. Health alerts have been upgraded as vulnerable people face severe risks.

West Bay cliff collapses on beach in dramatic rockfall

Terrifying footage shows a cliff face crashing down on Dorset's Jurassic Coast, forcing people to flee. Authorities warn of further rockfall risks.

Second major snow blizzard forecast for UK next week

Fresh weather maps indicate a powerful snow band will sweep the UK from January 9, with London expecting 2 inches and Scotland facing up to 15 inches.

Tech

Environment

British Isles Geography: Thursday Challenge

Thursday means geography challenge time! Test your knowledge of the British Isles with questions about landscapes, cities, rivers, and famous landmarks.