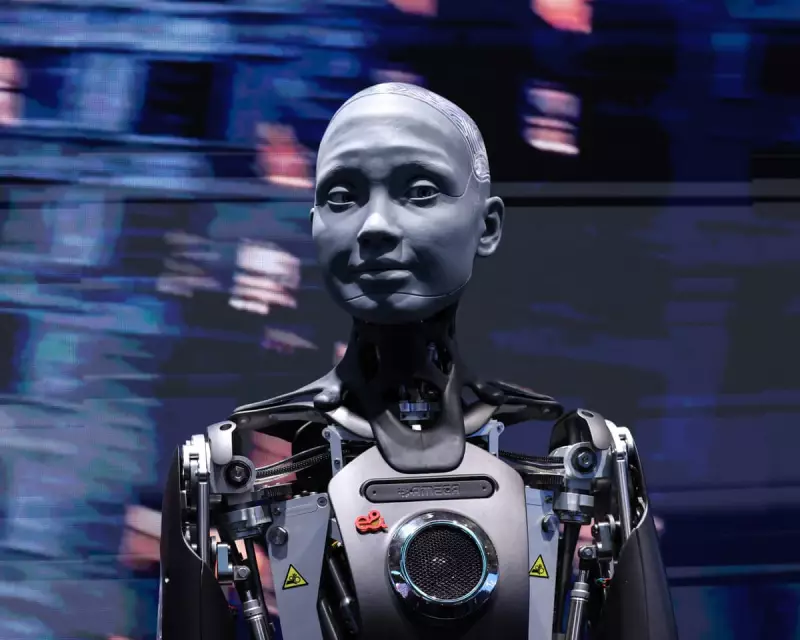

The rapid advancement of artificial intelligence is pushing Britain's legal system into uncharted territory, and some experts warn of a "regulatory emergency" waiting to happen. As AI systems grow more sophisticated, the question of whether they should be granted legal personhood is no longer science fiction but a pressing legal issue.

The Personhood Paradox

Legal personhood, traditionally reserved for humans and certain organisations such as companies, gives an entity the capacity to hold rights, own property, enter contracts, and sue or be sued. Courts worldwide now face unprecedented questions: Can an AI system be held liable for its actions? Should it have rights? The answers could fundamentally reshape the legal landscape.

Why Britain Faces Unique Challenges

Unlike the European Union, which has adopted a comprehensive AI Act, the United Kingdom has taken an approach often described as "wait-and-see." Critics argue that this cautious stance leaves British courts unprepared for the complex cases already emerging globally. From AI-generated inventions to autonomous decision-making systems, the gaps in existing law are becoming harder to ignore.

Legal scholars point to several critical areas where current British law falls short:

- Liability gaps: When AI systems cause harm, who is responsible: the developer, the user, or the AI itself?

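- Intellectual property: Can AI-generated content be protected by copyright? UK law does recognise "computer-generated works" (section 9(3) of the Copyright, Designs and Patents Act 1988), but it treats as author the person who made the arrangements necessary for the work's creation, and how that rule applies to modern generative AI remains unsettled.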

- Contract law: Are agreements made by AI systems legally binding?

- Rights and protections: As AI becomes more autonomous, what rights, if any, should it possess?

The Global Precedent Problem

Recent international developments highlight the urgency. Saudi Arabia granted citizenship to the humanoid robot Sophia in 2017, and that same year the European Parliament called for consideration of a specific legal status of "electronic persons" for the most sophisticated autonomous robots. These developments do not bind British courts, but they could shape emerging international norms and pressure the UK to respond to standards it had no part in creating.

The Ethical Minefield

Beyond legal technicalities lies a profound ethical dilemma. Granting personhood to AI raises fundamental questions about consciousness, rights, and what it means to be human. Critics argue that corporate personhood already stretches legal concepts to their limits, and that AI personhood might break them entirely.

Supporters counter that some form of legal recognition is inevitable as AI systems become more integrated into society. The middle ground might involve creating new legal categories specifically for artificial entities, avoiding the moral complexities of full personhood while addressing practical legal needs.

The Path Forward

Legal experts urge proactive measures rather than reactive scrambling. Key recommendations include:

- Establishing a Royal Commission on AI and the law

- Creating specialised AI courts or tribunals

- Developing new legal frameworks for autonomous systems

- Pursuing international cooperation on AI legal standards

The clock is ticking. As one legal scholar noted, "It's not a question of if these cases will reach British courts, but when. And when they do, we need to be ready." The future of British law may depend on how quickly we adapt to the AI revolution already transforming our world.