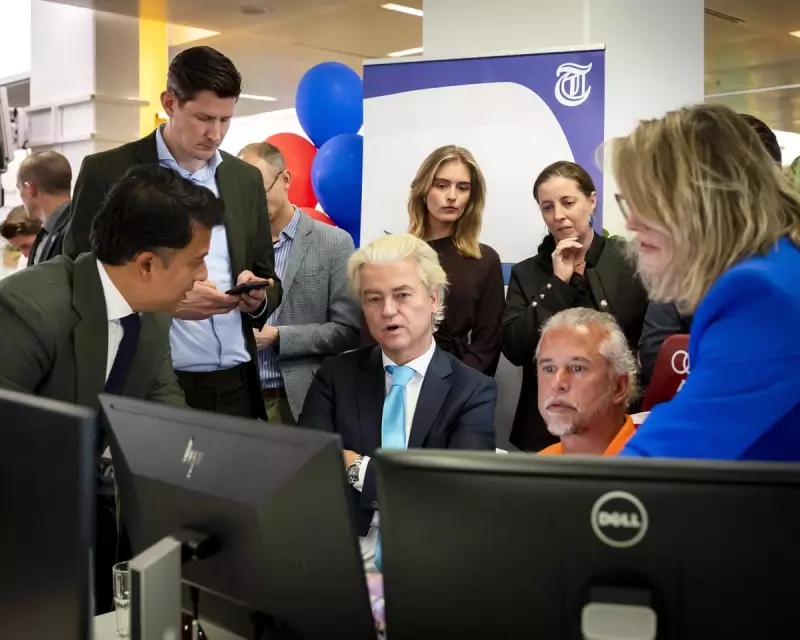

An investigation in the Netherlands has exposed serious flaws in popular AI chatbots, finding that they often give unreliable and politically biased advice to voters seeking election guidance.

Democracy at Risk

The Dutch consumer watchdog Consumentenbond tested several leading AI systems, including ChatGPT, Google Gemini, and Microsoft Copilot. Its findings raise serious doubts about whether these systems are ready to handle politically sensitive queries.

Inconsistent and Dangerous Guidance

Researchers found that the same chatbot often gave completely different answers to identical questions. More alarmingly, some systems gave plainly incorrect information about voting procedures and eligibility, errors that could stop eligible citizens from casting a vote.

Political Bias Exposed

The investigation uncovered clear political leanings in how the chatbots responded. When asked which political party best matched a user's stated values, the systems frequently recommended different parties for identical input. This inconsistency, together with the leanings observed, may point to biases embedded in their training data.

Testing Methodology

The watchdog's testing included:

- Multiple rounds of identical political questions

- Analysis of response consistency across platforms

- Evaluation of factual accuracy on voting procedures

- Assessment of political recommendation patterns

Industry Response

While some AI companies acknowledged the issues and pledged improvements, others defended their systems' performance. The inconsistencies documented in the tests highlight the ongoing challenge of building politically neutral artificial intelligence.

Broader Implications

This research raises urgent questions about the role of AI in democratic processes worldwide. With several countries approaching elections, the potential for AI systems to influence voter behaviour, whether inadvertently or by design, cannot be ignored.

The Dutch findings are a clear warning against deploying increasingly sophisticated AI tools in politically charged settings without proper safeguards and transparency.