A popular social media account that amassed hundreds of thousands of followers by sharing videos about Australian wildlife has been exposed as a fabrication, sparking widespread accusations of 'digital blackface' and cultural appropriation. The figure, known as 'Bush Legend', is not a real Indigenous man but an artificial intelligence-generated avatar.

The Unmasking of a Digital Persona

The account, which has since changed its name on Meta platforms to Keagan Mason, featured dozens of videos of a digitally created man set against enhanced backdrops resembling the Australian Outback. In many clips, the AI character sported markings and adornments strikingly similar to traditional cultural elements from Aboriginal communities. The videos, which often resembled enthusiastic wildlife explainers in the style of the late Steve Irwin, gained the account more than 200,000 followers on Facebook and Instagram.

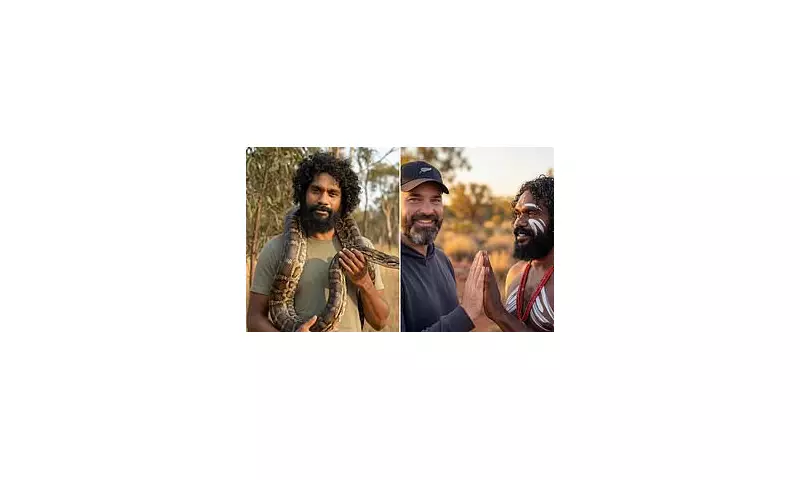

However, the creator behind the profile is reportedly a foreign national living in New Zealand. This came to light after a profile picture was uploaded showing the AI-generated character standing beside a white man wearing a cap featuring the New Zealand flag. The cultural markings seen in the videos are believed to have been added without the consent of, or consultation with, Aboriginal communities.

Backlash and Accusations of Exploitation

The disclosure triggered a fierce backlash on social media, with users condemning the act of masquerading as an Indigenous wildlife expert. One critic labelled the practice 'digital blackface', while another stated, 'It's all fake and until I'm proved otherwise it's exploiting Aboriginal culture.'

In a video uploaded on January 10, the AI-generated figure addressed followers, claiming the platform was 'not for profit' but intended to spread knowledge. 'I'm not here to represent any culture or group and this channel is simply about animal stories,' the avatar said, as the song 'Didgeridoo Outback' played in the background. The account's Instagram biography now states: 'This page uses AI-generated visuals to share wildlife stories for education and awareness. The focus is on animals and nature only.'

Despite these claims, many were left unimpressed. Torres Strait Islander musician Kee'ahn challenged the creator, asking, 'If it's just animal stories - don't use the likeness of Aboriginal people? Don't use Yidaki/Didgeridoo music?' They added, 'It's obvious the kind of cultural image you're trying to push and it's unethical because it's not real.' Other users called the act 'completely disrespectful' and demanded the creator 'use your own face instead of appropriating other peoples' and culture.'

Legal and Cultural Experts Weigh In

Stephen Gray, a senior lecturer at Monash University's Faculty of Law, told the Daily Telegraph that the educational defence did not shield the account from scrutiny. 'I think the excuse that it's educational or promoting Indigenous cultures is pretty poor if it's not produced [by Indigenous people],' he said. Gray emphasised that the content was 'not consistent with Indigenous law and culture' and connected it to a 'fairly long line, for many decades, of various kinds of appropriation' that causes a 'post-traumatic syndrome for Indigenous people.'

The controversy raises significant questions about ethics, authenticity, and cultural respect in the age of generative AI. The account had previously promoted paid subscriptions, though the avatar later claimed he was 'not asking anyone for money' and that videos were 'free to watch, with no obligations'. The Daily Mail has attempted to contact both the account owner and Meta for comment on the growing criticism.