Entertainment

Coleen Nolan's Weight Loss Journey Wows Loose Women Fans

Coleen Nolan, 60, stuns fans with behind-the-scenes photos showcasing her trim figure after a health wake-up call. The Loose Women panellist reveals her pre-diabetes diagnosis and new diet.

Sports

Arsenal's Kai Havertz Ruled Out of Tottenham Clash with New Injury Setback

Arsenal forward Kai Havertz will be absent for the crucial north London derby against Tottenham after sustaining a fresh injury, compounding existing long-term fitness concerns at the club.

Politics

Judge Orders Trump Administration to Return Illegally Deported Venezuelans

A federal judge has ordered the Trump administration to facilitate the return of Venezuelan men illegally deported to a Salvadoran prison, citing flagrant due process violations.

Crime

Systemic Failings Exposed in Marten Baby Tragedy, Thousands of Children Remain at Risk

A national review reveals systemic gaps in support for parents after child removal, following the death of Constance Marten's baby. Experts warn thousands of children remain vulnerable.

Weather

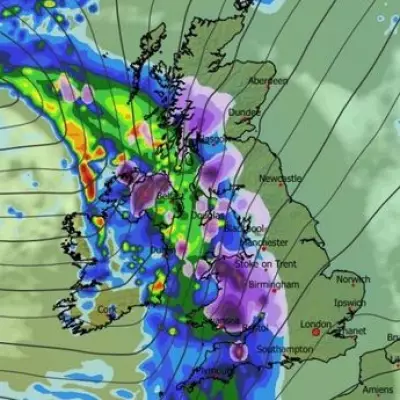

Twin Blizzards to Bury UK Cities with 19 Inches of Snow

Advanced weather maps reveal two major blizzards will hit the UK, bringing up to 19 inches of snow to cities like London and Birmingham, with intense flurries expected.

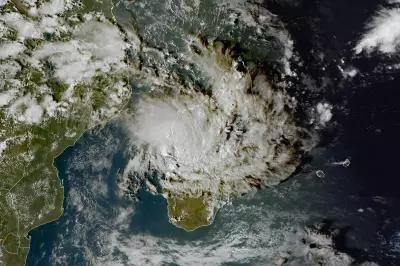

Cyclone Gezani Kills 20 in Madagascar, Causes Widespread Damage

Tropical Cyclone Gezani has struck Madagascar, resulting in at least 20 fatalities and severe infrastructure damage, with thousands evacuated and warnings of further storms ahead.

Snow Warning for 26 UK Cities on Thursday and Friday

The Met Office has issued yellow warnings as snow is forecast to sweep southwards from Scotland, with up to 10cm expected in some areas and travel disruption likely.

UK's Record 42-Day Rain Deluge: Causes and Forecast

The UK faces a record-breaking 42 consecutive days of rainfall, with forecasters warning of continued downpours and flooding. A stalled low-pressure system is to blame.

UK Cold Weather Alert as Temperatures Drop to -4C

The UK Health Security Agency has issued a yellow cold weather health alert for large parts of the UK from Friday to Monday, warning of risks to vulnerable people as temperatures fall below zero.

Environment

UK Geography Mastery: Wednesday Test

How well do you know UK geography? Take our Wednesday test covering all aspects of British physical and human geography with 100 multiple-choice questions.