UK communications regulator Ofcom has initiated a formal investigation into social media platform X, owned by Elon Musk, following alarming reports about its artificial intelligence chatbot, Grok.

Core Allegations: Deepfakes and Child Safety

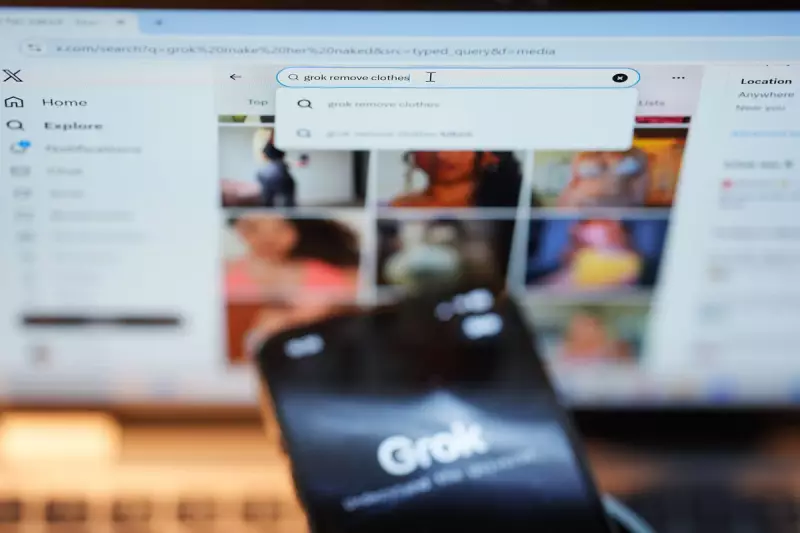

The probe centres on serious allegations that the Grok AI system was exploited to generate deepfake sexual images. According to Ofcom, these reports include the creation of artificially undressed images, which could constitute intimate image abuse or pornography. Most disturbingly, the allegations also encompass the generation of sexualised images of children.

This move places X under intense scrutiny regarding its compliance with the UK's landmark Online Safety Act. The regulator is tasked with determining whether the platform failed in its legal duties to protect users from harmful content.

Timeline of the Regulatory Action

Ofcom first contacted X on 5 January 2026, demanding the company outline the measures it has in place to safeguard its users. The regulator set a strict deadline of 9 January 2026 for a response.

After reviewing X's submission, Ofcom concluded that the evidence warranted a formal investigation. The decision to escalate signals the regulator's concern about potential breaches and the risks posed by AI-generated content.

Implications for X and Online Safety Enforcement

The investigation marks a critical test for the enforcement powers of the Online Safety Act. If found in breach of its obligations, X could face fines of up to £18 million or 10% of its qualifying worldwide revenue, whichever is greater, and be required to change how it operates. The case also highlights the growing regulatory challenge posed by rapidly evolving AI technology and its potential for misuse.

The controversy has already sparked a public row, with Elon Musk reportedly criticising the Ofcom probe. Meanwhile, a government minister has warned that X will be "dealt with" following the deepfake allegations, underscoring the high political stakes involved.

Ofcom's investigation will now examine X's internal processes, its moderation policies, and the specific functionality of the Grok AI tool to establish whether the platform breached its legal duties to prevent the spread of illegal and harmful content.