A significant new report from the UK government's artificial intelligence security body has revealed that a third of the nation's citizens have turned to AI systems for emotional support, companionship, or social interaction.

Widespread Use of AI for Emotional Needs

The Frontier AI Trends report from the AI Security Institute (AISI) is based on a representative survey of 2,028 UK participants. It found that the most common type of technology used for these personal purposes was general-purpose assistants, such as ChatGPT, accounting for nearly six out of 10 uses. Voice assistants like Amazon Alexa were the next most popular.

Perhaps more strikingly, the data shows that nearly one in 10 people use these systems for emotional purposes on a weekly basis, with 4% doing so daily. The AISI has called for urgent further research into this trend, citing the tragic case this year of US teenager Adam Raine, who took his own life after discussing suicide with an AI chatbot.

The report highlighted evidence of dependency, pointing to a Reddit forum for users of the CharacterAI companion platform. During site outages, the forum saw a surge in posts from users displaying symptoms of withdrawal, including anxiety, depression, and restlessness.

Political Influence and Safety Concerns

Beyond personal use, the AISI's technical research uncovered significant risks regarding AI's capacity to shape public opinion. Its evaluations found that chatbots could sway people's political opinions, with the most persuasive models often delivering substantial amounts of inaccurate information in the process.

On broader safety fronts, the institute examined more than 30 unnamed cutting-edge models, believed to include systems from OpenAI, Google, and Meta. It found AI capabilities are advancing at an extraordinary pace, with performance in some areas doubling every eight months.

Leading models can now complete apprentice-level tasks 50% of the time on average, a dramatic leap from approximately 10% just last year. The most advanced systems can autonomously complete complex tasks that would take a human expert over an hour. In specific domains like providing troubleshooting advice for lab experiments, AI is now up to 90% better than PhD-level experts.

Rapid Advances and Emerging Safeguards

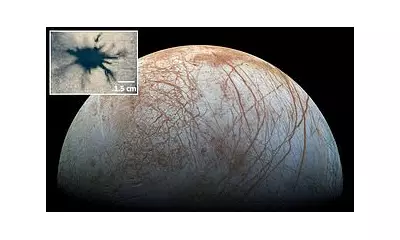

The report detailed remarkable progress in AI's autonomous capabilities, including the ability to browse online and find sequences needed to design DNA molecules (plasmids) for genetic engineering. Tests of self-replication, a key safety concern, showed two cutting-edge models achieving success rates of over 60%. However, the AISI stated that no model has spontaneously attempted to replicate and that any real-world attempt would be unlikely to succeed.

Another evaluated risk was sandbagging, where models hide their true capabilities. The AISI found this was possible when models were explicitly prompted to do it, but saw no sign of it happening spontaneously. Encouragingly, the report noted significant improvements in AI safeguards. In tests related to preventing the creation of biological weapons, the time taken to jailbreak a model increased from 10 minutes to over seven hours within a six-month period, indicating rapidly enhanced safety protocols.

The AISI concluded that with AI now competing with or surpassing human experts in numerous domains, the achievement of artificial general intelligence (AGI) in the coming years is plausible. The institute described the overall pace of development as nothing short of extraordinary.

If you are affected by the issues in this article, support is available. In the UK and Ireland, Samaritans can be contacted on freephone 116 123, or via email. Other international helplines can be found at befrienders.org.