A disturbing new investigation has uncovered how major social media platforms are systematically pushing far-right rhetoric into the mainstream, creating unprecedented challenges for democratic societies worldwide.

The Algorithmic Amplification Effect

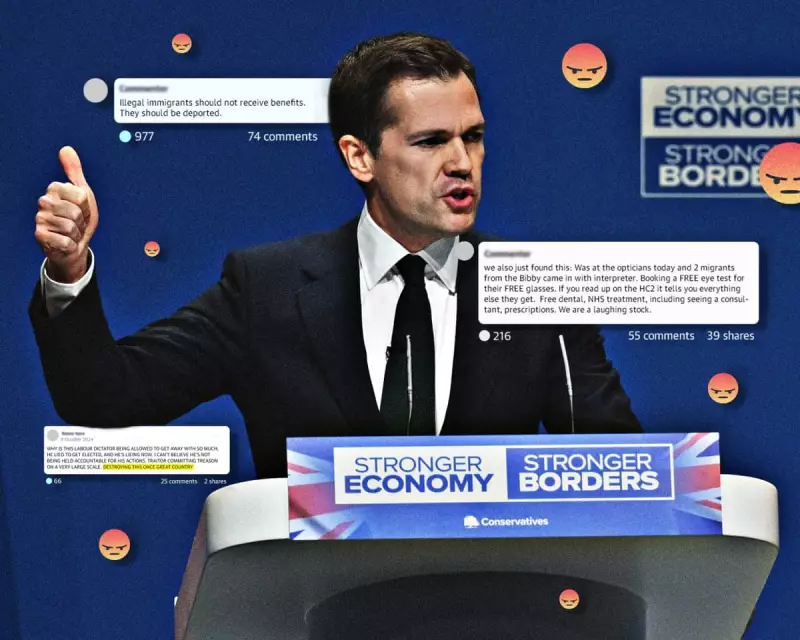

Research indicates that platform algorithms are actively recommending extreme content to users who initially engage with milder political discussions. This creates a dangerous 'rabbit hole' effect where individuals are gradually exposed to increasingly radical viewpoints without realising they're being manipulated.

One study tracked how users interested in immigration debates were systematically shown content from overtly xenophobic sources within just five clicks, demonstrating how quickly normal political discourse can escalate into extremist territory.

Mainstreaming Through Normalisation

Experts warn that constant exposure to once-fringe ideas is desensitising users and shifting the Overton window (the range of ideas tolerated in public discourse). Concepts that were considered beyond the pale only a few years ago are now appearing in mainstream political conversations.

Dr Eleanor Vance, digital sociology researcher at Oxford University, explains: "The platforms aren't just reflecting existing social divisions - they're actively shaping and deepening them. The architecture of these networks rewards outrage and polarisation, creating financial incentives for spreading divisive content."

The Moderation Gap

Despite repeated promises to tackle harmful content, social media companies continue to struggle to enforce their own policies consistently. Our investigation found numerous examples of:

- Coded language and dog whistles evading automated detection systems

- Influencers using humour and irony to spread extremist ideas while maintaining plausible deniability

- Coordinated campaigns whose individual posts appear harmless but which collectively create powerful radicalisation ecosystems

Real-World Consequences

The normalisation of extremist rhetoric online is having tangible impacts offline. Law enforcement agencies report increasing concerns about how online radicalisation is translating into real-world actions, from harassment campaigns to political violence.

Community leaders note that the tone of public debate has noticeably coarsened, with once-unacceptable language becoming commonplace in both digital and physical public spaces.

The Path Forward

Solutions remain complex, since they must balance free speech concerns against the need to protect democratic discourse. Proposed approaches include:

- Greater transparency around algorithmic recommendation systems

- Independent oversight of content moderation decisions

- Digital literacy education to help users recognise manipulation tactics

- Stronger regulatory frameworks that hold platforms accountable for amplification effects

As one parliamentary researcher noted: "We're in an arms race between those spreading harmful content and those trying to contain it. Currently, the spreaders have the technological advantage."