In a significant development for social media governance, YouTube has reached a confidential settlement in a high-profile lawsuit that accused the platform of amplifying Donald Trump's election fraud claims in the lead-up to the January 6th Capitol attack.

Platform Accountability Under Scrutiny

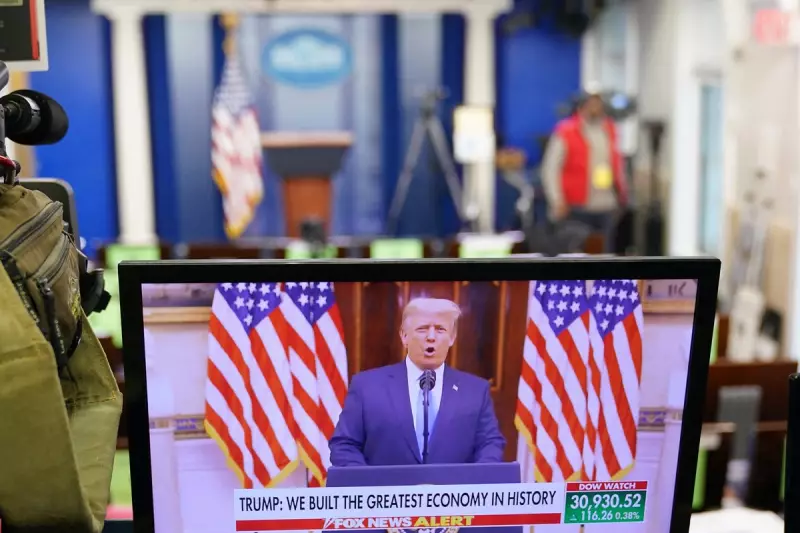

The legal action, filed by a California police officer and a Democratic congressman, alleged that YouTube's algorithm actively promoted videos containing baseless election fraud claims during the critical period before the insurrection. The plaintiffs argued that this amplification created what they termed a "digital mob", which ultimately contributed to the violence at the US Capitol.

The Core Allegations

The lawsuit centred on several key claims:

- YouTube's recommendation system preferentially promoted content featuring Trump's election fraud claims

- The platform failed to adequately moderate dangerous content despite clear warning signs

- This algorithmic amplification created an environment conducive to radicalisation

- Platform policies were inconsistently applied to political content

Broader Implications for Social Media

While the settlement terms remain confidential, the case raises fundamental questions about the responsibility of tech giants in moderating political content. A settlement creates no binding legal precedent, but legal experts suggest it could still influence how platforms handle content from political figures during volatile periods.

The outcome comes amid increasing global scrutiny of social media platforms' role in political discourse and their ability to prevent the spread of misinformation.

Section 230 Considerations

This case probed the boundaries of Section 230 of the Communications Decency Act, which generally shields online platforms from liability for user-generated content. The plaintiffs argued that YouTube's algorithmic recommendations turned it from a passive host into an active promoter of content.

By settling, the parties avoided a definitive court ruling on this crucial question, leaving future cases to decide how Section 230 applies to algorithmically recommended content.

Industry-Wide Impact

Content moderation experts suggest this settlement may prompt platforms to:

- Re-evaluate how algorithms handle political content during election periods

- Implement more transparent moderation policies for high-profile accounts

- Develop clearer protocols for handling content from political leaders

- Increase investment in election integrity measures

The case concludes as social media platforms continue to grapple with their role in political discourse and with how to balance free speech against content moderation.