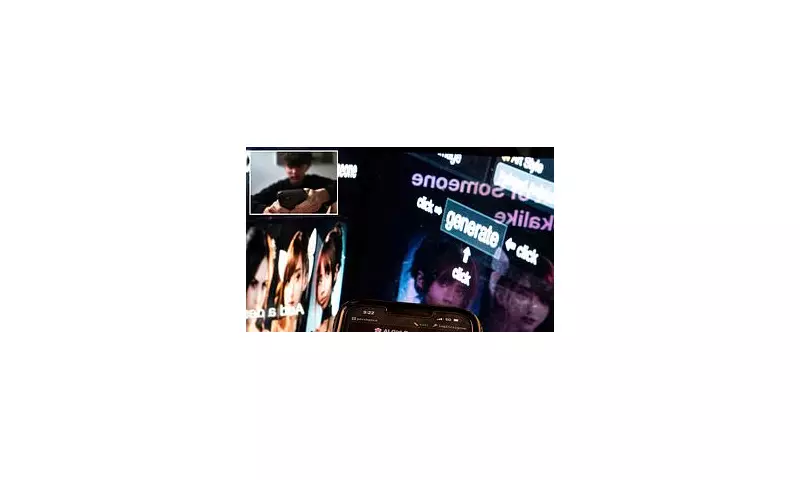

Alarming new reports have exposed a disturbing trend among teenage boys in the UK: the use of artificial intelligence applications to generate explicit fake images of female classmates and even teachers. These so-called 'nudifying' apps employ sophisticated deepfake technology to digitally remove clothing from ordinary photos, creating highly realistic but entirely fabricated nude images.

The Dark Side of Accessible AI

What makes this phenomenon particularly concerning is the ease with which these apps can be accessed and used. Many are available through mainstream app stores or websites and require no technical expertise. Experts warn this represents a dangerous new frontier in cyberbullying and harassment, with potentially devastating consequences for victims.

Schools on the Frontline

Educational institutions across the country are reporting a growing number of incidents involving these AI-generated images. Teachers describe students sharing the fake nudes through messaging apps or social media, often causing severe emotional distress for those targeted.

Legal and Ethical Quagmire

The legal framework is struggling to keep pace with this emerging threat. While creating and sharing such images of minors could violate child pornography laws, enforcement remains challenging. Many apps operate from jurisdictions with lax regulations, making them difficult to shut down.

Key concerns raised by child protection experts:

- The psychological impact on young victims of this digital violation

- The normalization of non-consensual image sharing among teenagers

- The lack of effective tools for schools to detect and prevent this abuse

- The urgent need for tech companies to take responsibility for harmful AI applications

As this troubling trend continues to spread, parents, educators and policymakers face mounting pressure to address both the technological and societal factors enabling this new form of digital exploitation.