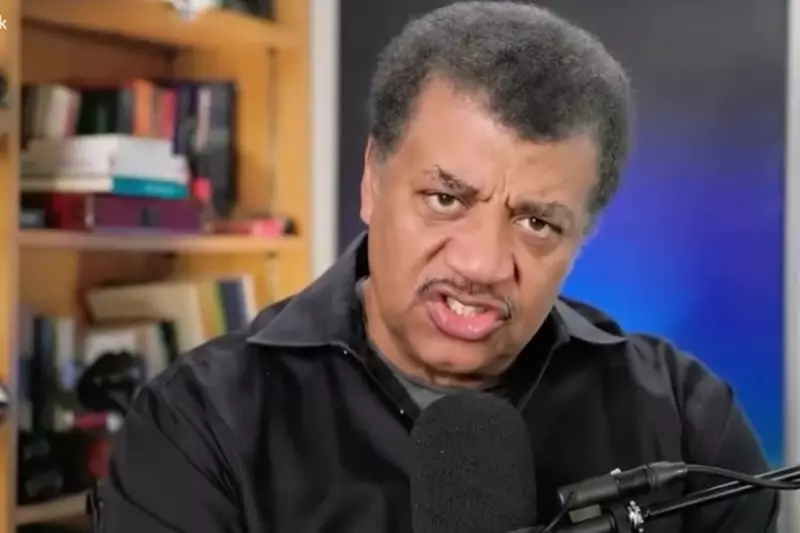

In a startling demonstration of artificial intelligence's dark potential, renowned astrophysicist Neil deGrasse Tyson has become the latest high-profile victim of a sophisticated deepfake attack. A fabricated video showing Tyson apparently endorsing flat Earth conspiracy theories has been circulating online, catching both scientists and social media users off guard.

The Viral Deception

The deepfake portrays Tyson delivering an impassioned monologue in support of the long-debunked flat Earth hypothesis. Using advanced AI technology, its creators have closely mimicked Tyson's distinctive vocal patterns, facial expressions, and mannerisms, making the fabrication difficult to distinguish from genuine content at first glance.

"This represents a new frontier in scientific misinformation," digital forensics expert Dr. Evelyn Reed told The Independent. "When trusted scientific voices can be so convincingly impersonated, it undermines public understanding of established facts."

Science Communication Under Fire

Neil deGrasse Tyson, director of the Hayden Planetarium and one of the world's most prominent science communicators, has built his career on debunking pseudoscience and promoting scientific literacy. The deepfake's creators have deliberately targeted his reputation, using his credibility to lend false authority to anti-scientific claims.

The incident highlights growing concerns within the scientific community about how AI technology could be weaponised against evidence-based discourse. Researchers worry that such sophisticated forgeries could erode public trust in scientific institutions and experts.

The Growing Threat of AI Manipulation

This isn't the first instance of AI being used to create misleading content, but it represents one of the most sophisticated attacks on a scientific figure to date. Cybersecurity experts note that the technology required to create such convincing deepfakes is becoming increasingly accessible to bad actors.

"We're entering an era where seeing is no longer believing," warned Dr. Marcus Thorne, who specialises in digital ethics at University College London. "The public needs to develop new critical thinking skills for verifying online content, especially when it comes from seemingly authoritative sources."

Fighting Back Against Digital Deception

Social media platforms and technology companies are racing to develop detection tools that can identify AI-generated content. However, the pace of technological advancement often outstrips these defensive measures.

Tyson's team has issued statements confirming that the video is fabricated and urging the public to verify scientific information through official channels. The incident serves as a stark reminder that in the digital age, even our most trusted voices can be hijacked by sophisticated technology.

As AI continues to evolve, the battle between creation and detection of synthetic media is likely to intensify, raising fundamental questions about truth, trust, and reality in our increasingly digital world.