A damning new study has revealed that facial recognition technology, widely used by law enforcement and private firms, exhibits significant racial bias, disproportionately misidentifying individuals from Black, Asian, and minority ethnic backgrounds.

Key Findings of the Study

The research, conducted by leading AI ethics experts, found that error rates for people of colour were up to 10 times higher than for white individuals. This alarming disparity raises serious questions about the fairness and reliability of these systems.

Real-World Consequences

In practical terms, these flaws mean that:

- Innocent individuals are more likely to be wrongly flagged by surveillance systems

- Law enforcement decisions may be influenced by biased algorithmic outputs

- Marginalised communities face disproportionate scrutiny

Industry Response and Calls for Regulation

Tech companies developing these systems have acknowledged the issue but argue that improvements are being made. However, civil rights groups are demanding:

- Immediate transparency about system accuracy rates

- Independent audits of facial recognition algorithms

- Stricter regulations on deployment, particularly in policing

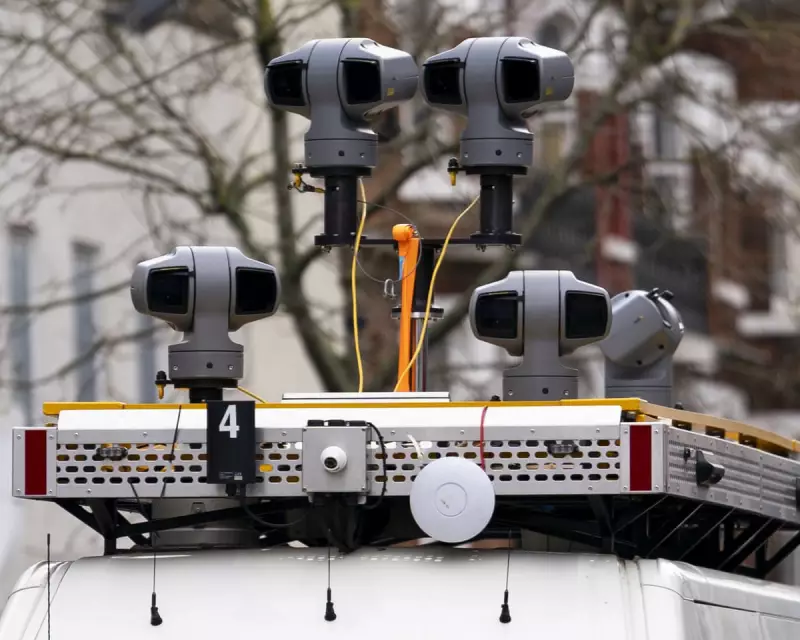

The findings come as several UK police forces expand their use of live facial recognition technology, despite ongoing concerns from privacy advocates.

The Path Forward

Experts suggest that addressing these biases requires:

- More diverse training datasets

- Rigorous testing across different demographic groups

- Clear accountability mechanisms for when systems fail

As the debate continues, one thing is clear: without significant changes, facial recognition technology risks entrenching existing inequalities in our justice system.